Until recently, if you wanted to work on your professional development as an IT worker, you only had to follow your vendor’s certification path. After all, it is the hard skills that will land you your next job.

Certifications, combined with experience, are enough for a successful career. What more do you need? Of course, as your skills improve, you move into roles where ‘consulting skills’ and other soft skills are required. Good communication increases your effectiveness. We all know IT professionals who make a valuable contribution to the team but whom you would rather not send to a customer. Fine craftsman, but keep him indoors.

But how do you grow as a professional? What if an extra certificate does not move your career forward, and the roadblocks you encounter are of a different kind? For some of these, there are well-known self-help books, such as *Getting Things Done* or *The 7 Habits of Highly Effective People*. There are also many webcasts and training programs aimed at social and communication skills. These can offer eye-openers, but they also have their limitations. They do not know your situation, your company culture, your character, the local cultural chemistry, or the politics and hidden agendas. You are left to pick up the lessons by osmosis, and there is no dialogue at all.

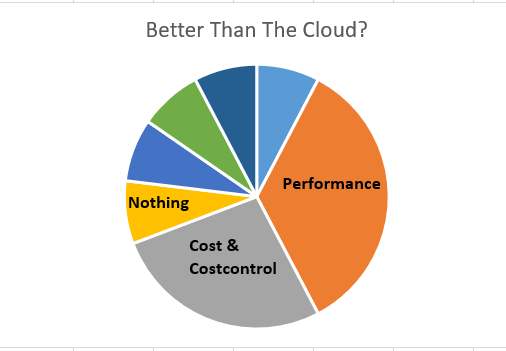

This problem has grown significantly in recent years, at least for me. With the cloud, many new technologies have come within reach, companies are being disrupted or are trying to stay ahead of disruption through a digital transformation, and the role of the DBA is being redefined. These three developments move at a different pace in every company, which can lead to substantial growing pains. Even within a single company, people sit at different points on the hype cycle. This causes substantial problems for me.

Personal guidance is indispensable, but how do you obtain it? Asking the people around you for help and feedback can be difficult, especially in a strongly political environment, or when there simply are no suitable conversation partners available. A few leading IT people are trying to show us the way here. Brent Ozar has his Level 500 Guide to Career Internals. It’s a webcast, so the communication is still a one-way street. Another interesting offer came from Paul Randal: Personal Guidance by Email.

Having a well-informed discussion partner in this rapidly changing world is invaluable. When technical challenges are no longer real challenges and you have to determine your position in these volatile times, personal mentorship can make the difference. Let’s hope more mentors will rise to meet this demand.