What are the top 3 mistakes I have seen during cloud migrations?

I have worked for various companies that embarked on a cloud migration project. What struck me is that, although the reasons for going to the cloud differ per company, they all seem to hit the same problems. Some of them overcame the issues, but others fell out of the cloud tree, hitting every branch on the way down.

So here is my top 3 of mistakes, from companies that went for a digital transformation to the ones going for a simple IaaS solution (if you allow me to label that as a cloud migration for the sake of this post).

MISTAKE 1: The Big Bang Theory

Let's start off with an important mistake: we’re going to the cloud without doing a pilot project, we’re going Big Bang. It goes like this: the upper brass chooses a provider and sets up the migration plan. The opinion of their own technicians is skipped, because they wouldn’t agree anyway. Their jobs would be on the line, so their opinion can be discarded as biased. In one case, the company's own staff was deemed inferior, as one manager told me: “We can’t beat players who play in the Champions League”, referring to the cloud provider.

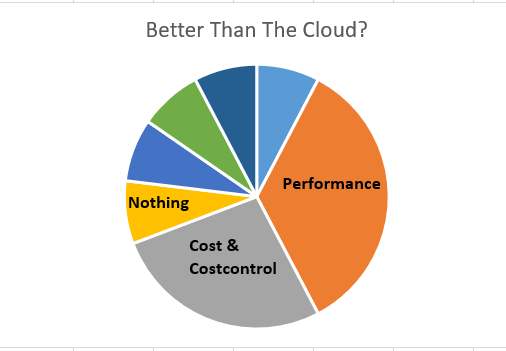

I’ve seen this run amok in several ways. With IaaS migrations, I’ve twice seen that the performance was far worse than expected or the service was bad (many outages, with poor diagnostic capabilities on the IaaS provider's side). With cloud migrations aimed at a digital transformation, it turned out that cloud technologies are not yet mainstream. Real expertise is still under construction, so mistakes will be made.

Lesson: One would like to make these kinds of mistakes during a pilot project, not during a mass migration.

MISTAKE 2: The cloud is just an on-prem datacenter

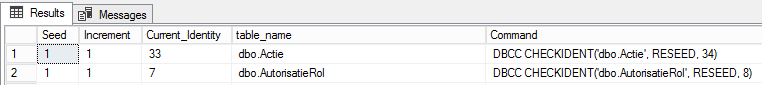

The cloud is a nice greenfield situation and promises a digital transformation unburdened by legacy systems. The services and machines in the cloud are new and come loaded with extra bells and whistles. No more dull monolithic architecture, but the opportunities of a microservice architecture instead. Gradually it is understood that the cloud entails a bit more than this. For example, how are the responsibilities reallocated among the staff, can the company switch to a scrum culture, and how cloud-savvy are the architects and technicians? On that last point, I’ve seen an architect propose to give every application its own database instance. Providers typically charge per provisioned instance, so the bill grows with every application you add. Such a lack of understanding of the provider's revenue model will make your design of the database landscape fall apart.

Lesson: A cloud transformation without a company transformation in terms of organisation, processes, methods, IT architecture and corporate culture is a ‘datacenter in the cloud’: new hat, same cowboy.

MISTAKE 3: The cloud fixes a bad IT department

Some situations are too sensitive to be discussed openly, so the next mistake is one I picked up from people in whisper mode: the quality of the company's own IT department doesn’t suffice, and the brave new cloud will lead us to a better IT department. The changes are all good: on-premises datacenters are unnecessary, some jobs such as database engineers are not needed anymore, and there are no more patch policies or outages. The cloud works as an automagical beautifier.

In short, managers who were unable to create a satisfactory IT department now have an opportunity to save the day with a cloud migration. They can bring in a consultancy company to migrate to the new promised lands and climb the learning curve together before the budget runs out. The IT department used to be a train wreck, but thanks to this controlled explosion called ‘cloud migration’ something beautiful will grow from the resulting wastelands. I leave it to your imagination what could go wrong here.

These are the top 3 mistakes I have seen in the field. Surely there are more nuanced issues, such as how cheap and safe the cloud really is, but that is a subject I would like to discuss in a different post.